How To Download Folders From Jupyter Notebook

How to Use Jupyter Notebook in 2020: A Beginner's Tutorial

Published: August 24, 2020

What is Jupyter Notebook?

The Jupyter Notebook is an incredibly powerful tool for interactively developing and presenting data science projects. This article will walk you through how to use Jupyter Notebooks for data science projects and how to set it up on your local machine.

First, though: what is a "notebook"?

A notebook integrates code and its output into a single document that combines visualizations, narrative text, mathematical equations, and other rich media. In other words: it's a single document where you can run code, display the output, and also add explanations, formulas, and charts to make your work more transparent, understandable, repeatable, and shareable.

Using Notebooks is now a major part of the data science workflow at companies across the globe. If your goal is to work with data, using a Notebook will speed up your workflow and make it easier to communicate and share your results.

Best of all, as part of the open source Project Jupyter, Jupyter Notebooks are completely free. You can download the software on its own, or as part of the Anaconda data science toolkit.

Although it is possible to use many different programming languages in Jupyter Notebooks, this article will focus on Python, as it is the most common use case. (Among R users, RStudio tends to be a more popular choice.)

How to Follow This Tutorial

To get the most out of this tutorial you should be familiar with programming, Python and pandas specifically. That said, if you have experience with another language, the Python in this article shouldn't be too cryptic, and will still help you get Jupyter Notebooks set up locally.

Jupyter Notebooks can also act as a flexible platform for getting to grips with pandas and even Python, as will become apparent in this tutorial.

We will:

- Cover the basics of installing Jupyter and creating your first notebook

- Delve deeper and learn all the important terminology

- Explore how easily notebooks can be shared and published online.

(In fact, this article was written as a Jupyter Notebook! It's published here in read-only form, but this is a good example of how versatile notebooks can be. Most of our programming tutorials and even our Python courses were created using Jupyter Notebooks.)

Example Data Analysis in a Jupyter Notebook

First, we will walk through setup and a sample analysis to answer a real-life question. This will demonstrate how the flow of a notebook makes data science tasks more intuitive for us as we work, and for others once it's time to share our work.

So, let's say you're a data analyst and you've been tasked with finding out how the profits of the largest companies in the US changed historically. You find a data set of Fortune 500 companies spanning over 50 years since the list's first publication in 1955, put together from Fortune's public archive. We've gone ahead and created a CSV of the data you can use here.

As we shall demonstrate, Jupyter Notebooks are perfectly suited for this investigation. First, let's go ahead and install Jupyter.

Installation

The easiest way for a beginner to get started with Jupyter Notebooks is by installing Anaconda.

Anaconda is the most widely used Python distribution for data science and comes pre-loaded with all the most popular libraries and tools.

Some of the biggest Python libraries included in Anaconda are NumPy, pandas, and Matplotlib, though the full 1000+ list is exhaustive.

Anaconda thus lets us hit the ground running with a fully stocked data science workshop without the hassle of managing countless installations or worrying about dependencies and OS-specific (read: Windows-specific) installation issues.

To get Anaconda, simply:

- Download the latest version of Anaconda for Python 3.8.

- Install Anaconda by following the instructions on the download page and/or in the executable.

If you are a more advanced user with Python already installed and prefer to manage your packages manually, you can simply use pip:

    pip3 install jupyter

Creating Your First Notebook

In this section, we're going to learn to run and save notebooks, familiarize ourselves with their structure, and understand the interface. We'll become intimate with some core terminology that will steer you towards a practical understanding of how to use Jupyter Notebooks by yourself and set us up for the next section, which walks through an example data analysis and brings everything we learn here to life.

Running Jupyter

On Windows, you can run Jupyter via the shortcut Anaconda adds to your start menu, which will open a new tab in your default web browser that should look something like the following screenshot.

This isn't a notebook just yet, but don't panic! There's not much to it. This is the Notebook Dashboard, specifically designed for managing your Jupyter Notebooks. Think of it as the launchpad for exploring, editing and creating your notebooks.

Be aware that the dashboard will give you access only to the files and sub-folders contained within Jupyter's start-up directory (i.e., where Jupyter or Anaconda is installed). However, the start-up directory can be changed.

It is also possible to start the dashboard on any system via the command prompt (or terminal on Unix systems) by entering the command jupyter notebook; in this case, the current working directory will be the start-up directory.

With Jupyter Notebook open in your browser, you may have noticed that the URL for the dashboard is something like http://localhost:8888/tree. Localhost is not a website, but indicates that the content is being served from your local machine: your own computer.

Jupyter's Notebooks and dashboard are web apps, and Jupyter starts up a local Python server to serve these apps to your web browser, making it essentially platform-independent and opening the door to easier sharing on the web.

(If you don't understand this yet, don't worry. The important point is just that although Jupyter Notebooks opens in your browser, it's being hosted and run on your local machine. Your notebooks aren't actually on the web until you decide to share them.)

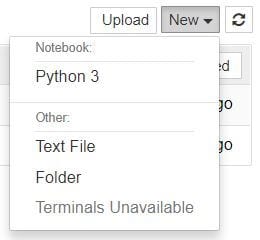

The dashboard's interface is mostly self-explanatory, though we will come back to it briefly later. So what are we waiting for? Browse to the folder in which you would like to create your first notebook, click the "New" drop-down button in the top-right and select "Python 3":

Hey presto, here we are! Your first Jupyter Notebook will open in a new tab. Each notebook uses its own tab because you can open multiple notebooks simultaneously.

If you switch back to the dashboard, you will see the new file Untitled.ipynb and you should see some green text that tells you your notebook is running.

What is an ipynb File?

The short answer: each .ipynb file is one notebook, so each time you create a new notebook, a new .ipynb file will be created.

The longer answer: Each .ipynb file is a text file that describes the contents of your notebook in a format called JSON. Each cell and its contents, including image attachments that have been converted into strings of text, is listed therein along with some metadata.
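To make the JSON structure concrete, here is a minimal sketch that builds a tiny notebook as a Python dictionary, serializes it, and lists its cells. The field names (`cells`, `cell_type`, `source`, `metadata`, `nbformat`) follow the version 4 notebook format; the notebook content and the helper `summarize_cells` are invented purely for illustration.

```python
import json

# A hand-written, minimal notebook in the version 4 format (illustrative content).
notebook = {
    "nbformat": 4,
    "nbformat_minor": 4,
    "metadata": {},
    "cells": [
        {"cell_type": "markdown", "metadata": {}, "source": ["# My analysis"]},
        {
            "cell_type": "code",
            "metadata": {},
            "execution_count": 1,
            "outputs": [],
            "source": ["print('Hello World!')"],
        },
    ],
}

# An .ipynb file is essentially this structure written out as JSON text.
ipynb_text = json.dumps(notebook, indent=1)


def summarize_cells(text):
    """Return (cell_type, source) pairs for each cell in notebook JSON text."""
    data = json.loads(text)
    return [(cell["cell_type"], "".join(cell["source"])) for cell in data["cells"]]


for cell_type, source in summarize_cells(ipynb_text):
    print(cell_type, "->", source)
```

The same `summarize_cells` helper should work on the text of a real notebook file read from disk, assuming it is also in the version 4 format.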

You can edit this yourself, if you know what you are doing, by selecting "Edit > Edit Notebook Metadata" from the menu bar in the notebook. You can also view the contents of your notebook files by selecting "Edit" from the controls on the dashboard.

However, the key word there is can. In most cases, there's no reason you should ever need to edit your notebook metadata manually.

The Notebook Interface

Now that you have an open notebook in front of you, its interface will hopefully not look entirely alien. After all, Jupyter is essentially just an advanced word processor.

Why not take a look around? Check out the menus to get a feel for it; in particular, take a few moments to scroll down the list of commands in the command palette, which is the small button with the keyboard icon (or Ctrl + Shift + P).

There are two fairly prominent terms that you should notice, which are probably new to you: cells and kernels are key both to understanding Jupyter and to what makes it more than just a word processor. Fortunately, these concepts are not difficult to understand.

- A kernel is a "computational engine" that executes the code contained in a notebook document.

- A cell is a container for text to be displayed in the notebook or code to be executed by the notebook's kernel.

Cells

We'll return to kernels a little later, but first let's come to grips with cells. Cells form the body of a notebook. In the screenshot of a new notebook in the section above, that box with the green outline is an empty cell. There are two main cell types that we will cover:

- A code cell contains code to be executed in the kernel. When the code is run, the notebook displays the output below the code cell that generated it.

- A Markdown cell contains text formatted using Markdown and displays its output in-place when the Markdown cell is run.

The first cell in a new notebook is always a code cell.

Let's test it out with a classic hello world example: Type print('Hello World!') into the cell and click the run button in the toolbar above or press Ctrl + Enter.

The result should look like this:

    print('Hello World!')

    Hello World!

When we run the cell, its output is displayed below and the label to its left will have changed from In [ ] to In [1].

The output of a code cell also forms part of the document, which is why you can see it in this article. You can always tell the difference between code and Markdown cells because code cells have that label on the left and Markdown cells do not.

The "In" part of the label is simply short for "Input," while the label number indicates when the cell was executed on the kernel. In this case the cell was executed first.

Run the cell again and the label will change to In [2] because now the cell was the second to be run on the kernel. It will become clearer why this is so useful later on when we take a closer look at kernels.

From the menu bar, click Insert and select Insert Cell Below to create a new code cell underneath your first and try out the following code to see what happens. Do you notice anything different?

    import time
    time.sleep(3)

This cell doesn't produce any output, but it does take three seconds to execute. Notice how Jupyter signifies when the cell is currently running by changing its label to In [*].

In general, the output of a cell comes from any text data specifically printed during the cell's execution, as well as the value of the last line in the cell, be it a lone variable, a function call, or something else. For example:

    def say_hello(recipient):
        return 'Hello, {}!'.format(recipient)

    say_hello('Tim')

    'Hello, Tim!'

You'll find yourself using this almost constantly in your own projects, and we'll see more of it later on.

Keyboard Shortcuts

One final thing you may have observed when running your cells is that their border turns blue, whereas it was green while you were editing. In a Jupyter Notebook, there is always one "active" cell highlighted with a border whose color denotes its current mode:

- Green outline: cell is in "edit mode"

- Blue outline: cell is in "command mode"

So what can we do to a cell when it's in command mode? So far, we have seen how to run a cell with Ctrl + Enter, but there are plenty of other commands we can use. The best way to use them is with keyboard shortcuts.

Keyboard shortcuts are a very popular aspect of the Jupyter environment because they facilitate a speedy cell-based workflow. Many of these are actions you can carry out on the active cell when it's in command mode.

Below, you'll find a list of some of Jupyter's keyboard shortcuts. You don't need to memorize them all immediately, but this list should give you a good idea of what's possible.

- Toggle between edit and command mode with Esc and Enter, respectively.

- Once in command mode:

  - Scroll up and down your cells with your Up and Down keys.

  - Press A or B to insert a new cell above or below the active cell.

  - M will transform the active cell to a Markdown cell.

  - Y will set the active cell to a code cell.

  - D + D (D twice) will delete the active cell.

  - Z will undo cell deletion.

  - Hold Shift and press Up or Down to select multiple cells at once. With multiple cells selected, Shift + M will merge your selection.

- Ctrl + Shift + -, in edit mode, will split the active cell at the cursor.

- You can also click and Shift + Click in the margin to the left of your cells to select them.

Go ahead and try these out in your own notebook. Once you're ready, create a new Markdown cell and we'll learn how to format the text in our notebooks.

Markdown

Markdown is a lightweight, easy to learn markup language for formatting plain text. Its syntax has a one-to-one correspondence with HTML tags, so some prior knowledge here would be helpful but is definitely not a prerequisite.

Remember that this article was written in a Jupyter notebook, so all of the narrative text and images you have seen so far were achieved writing in Markdown. Let's cover the basics with a quick example:

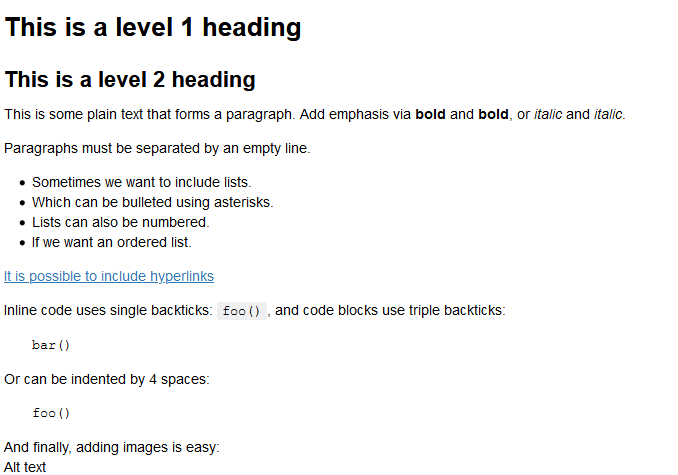

    # This is a level 1 heading

    ## This is a level 2 heading

    This is some plain text that forms a paragraph. Add emphasis via **bold** and __bold__, or *italic* and _italic_.

    Paragraphs must be separated by an empty line.

    * Sometimes we want to include lists.
    * Which can be bulleted using asterisks.

    1. Lists can also be numbered.
    2. If we want an ordered list.

    [It is possible to include hyperlinks](https://www.example.com)

    Inline code uses single backticks: `foo()`, and code blocks use triple backticks:
    ```
    bar()
    ```
    Or can be indented by 4 spaces:

        foo()

    And finally, adding images is easy: ![Alt text](https://www.example.com/image.jpg)

Here's how that Markdown would look once you run the cell to render it:

(Note that the alt text for the image is displayed here because we didn't actually use a valid image URL in our example.)

When attaching images, you have three options:

- Use a URL to an image on the web.

- Use a local URL to an image that you will be keeping alongside your notebook, such as in the same git repo.

- Add an attachment via "Edit > Insert Image"; this will convert the image into a string and store it inside your notebook .ipynb file. Note that this will make your .ipynb file much larger!

There is plenty more to Markdown, especially around hyperlinking, and it's also possible to simply include plain HTML. Once you find yourself pushing the limits of the basics above, you can refer to the official guide from Markdown's creator, John Gruber, on his website.

Kernels

Behind every notebook runs a kernel. When you run a code cell, that code is executed within the kernel. Any output is returned back to the cell to be displayed. The kernel's state persists over time and between cells: it pertains to the document as a whole and not individual cells.

For example, if you import libraries or declare variables in one cell, they will be available in another. Let's try this out to get a feel for it. First, we'll import a Python package and define a function:

    import numpy as np

    def square(x):
        return x * x

Once we've executed the cell above, we can reference np and square in any other cell.

    x = np.random.randint(1, 10)
    y = square(x)
    print('%d squared is %d' % (x, y))

    1 squared is 1

This will work regardless of the order of the cells in your notebook. As long as a cell has been run, any variables you declared or libraries you imported will be available in other cells.

You can try it yourself; let's print out our variables again.

    print('Is %d squared %d?' % (x, y))

    Is 1 squared 1?

No surprises here! But what happens if we change the value of y?

    y = 10
    print('Is %d squared %d?' % (x, y))

If we run the cell above, what do you think would happen?

We will get an output like: Is 1 squared 10?. This is because once we've run the y = 10 code cell, y is no longer equal to the square of x in the kernel.

Most of the time when you create a notebook, the flow will be top-to-bottom. But it's common to go back to make changes. When we do need to make changes to an earlier cell, the order of execution we can see on the left of each cell, such as In [6], can help us diagnose problems by seeing what order the cells have run in.
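One way to picture why execution order matters is to model the kernel as a single namespace that every cell runs in. The sketch below is a toy stand-in, not how Jupyter is actually implemented: `ToyKernel`, `run_cell`, and the cell strings are hypothetical names made up for this illustration.

```python
import contextlib
import io


class ToyKernel:
    """Toy model of a kernel: one shared namespace plus an execution counter."""

    def __init__(self):
        self.namespace = {}
        self.execution_count = 0

    def run_cell(self, source):
        """Run source in the shared namespace; return (label, printed text)."""
        buffer = io.StringIO()
        with contextlib.redirect_stdout(buffer):
            exec(source, self.namespace)
        self.execution_count += 1
        return "In [%d]" % self.execution_count, buffer.getvalue()


kernel = ToyKernel()
cell_a = "x = 3\ny = x * x"
cell_b = "print('Is %d squared %d?' % (x, y))"
cell_c = "y = 10"

kernel.run_cell(cell_a)
label_1, out_1 = kernel.run_cell(cell_b)  # sees x and y defined by cell_a
kernel.run_cell(cell_c)
label_2, out_2 = kernel.run_cell(cell_b)  # same cell, new result after cell_c

print(label_1, out_1, end="")
print(label_2, out_2, end="")
```

Running the same cell twice gives different answers because the shared state changed in between, and the labels record the order in which that happened.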

And if we ever wish to reset things, there are several incredibly useful options from the Kernel menu:

- Restart: restarts the kernel, thus clearing all the variables etc. that were defined.

- Restart & Clear Output: same as above but will also wipe the output displayed below your code cells.

- Restart & Run All: same as above but will also run all your cells in order from first to last.

If your kernel is ever stuck on a computation and you wish to stop it, you can choose the Interrupt option.

Choosing a Kernel

You may have noticed that Jupyter gives you the option to change kernel, and in fact there are many different options to choose from. Back when you created a new notebook from the dashboard by selecting a Python version, you were actually choosing which kernel to use.

There are kernels for different versions of Python, and also for over 100 languages including Java, C, and even Fortran. Data scientists may be particularly interested in the kernels for R and Julia, as well as both imatlab and the Calysto MATLAB Kernel for MATLAB.

The SoS kernel provides multi-language back up within a single notebook.

Each kernel has its own installation instructions, but will likely require you to run some commands on your computer.

Example Analysis

Now we've looked at what a Jupyter Notebook is, it's time to look at how they're used in practice, which should give us a clearer understanding of why they are so popular.

It's finally time to get started with that Fortune 500 data set mentioned earlier. Remember, our goal is to find out how the profits of the largest companies in the US changed historically.

It's worth noting that everyone will develop their own preferences and style, but the general principles still apply. You can follow along with this section in your own notebook if you wish, or use this as a guide to creating your own approach.

Naming Your Notebooks

Before you start writing your project, you'll probably want to give it a meaningful name. Click the file name Untitled in the upper left of the screen to enter a new file name, and hit the Save icon (which looks like a floppy disk) below it to save.

Note that closing the notebook tab in your browser will not "close" your notebook in the way closing a document in a traditional application will. The notebook's kernel will continue to run in the background and needs to be shut down before it is truly "closed", though this is pretty handy if you accidentally close your tab or browser!

If the kernel is shut down, you can close the tab without worrying about whether it is still running or not.

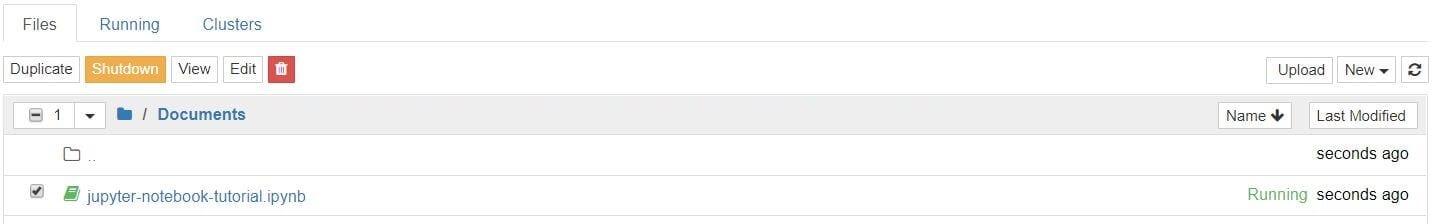

The easiest way to do this is to select "File > Close and Halt" from the notebook menu. However, you can also shut down the kernel either by going to "Kernel > Shutdown" from within the notebook app or by selecting the notebook in the dashboard and clicking "Shutdown" (see image).

Setup

It's common to start off with a code cell specifically for imports and setup, so that if you choose to add or change anything, you can simply edit and re-run the cell without causing any side-effects.

    %matplotlib inline
    import pandas as pd
    import matplotlib.pyplot as plt
    import seaborn as sns
    sns.set(style="darkgrid")

We'll import pandas to work with our data, Matplotlib to plot charts, and Seaborn to make our charts prettier. It's also common to import NumPy but in this case, pandas imports it for us.

That first line isn't a Python command, but uses something called a line magic to instruct Jupyter to capture Matplotlib plots and render them in the cell output. We'll talk a bit more about line magics later, and they're also covered in our advanced Jupyter Notebooks tutorial.

For now, let's go ahead and load our data.

    df = pd.read_csv('fortune500.csv')

It's sensible to also do this in a single cell, in case we need to reload it at any point.

Save and Checkpoint

Now we've got started, it's best practice to save regularly. Pressing Ctrl + S will save our notebook by calling the "Save and Checkpoint" command, but what is this checkpoint thing?

Every time we create a new notebook, a checkpoint file is created along with the notebook file. It is located within a hidden subdirectory of your save location called .ipynb_checkpoints and is also a .ipynb file.
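As a rough sketch of where to look for that file, the helper below maps a notebook path to its likely checkpoint path. The `<name>-checkpoint.ipynb` file name is an assumption about the naming convention rather than something stated above, and `checkpoint_path` is a hypothetical helper, so treat the result as a place to check rather than a guarantee.

```python
from pathlib import Path


def checkpoint_path(notebook_path):
    """Return where the checkpoint for notebook_path is assumed to live."""
    nb = Path(notebook_path)
    # Assumed convention: <name>-checkpoint.ipynb inside .ipynb_checkpoints,
    # which sits next to the notebook itself.
    return nb.parent / ".ipynb_checkpoints" / (nb.stem + "-checkpoint" + nb.suffix)


print(checkpoint_path("projects/Untitled.ipynb"))
```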

By default, Jupyter will autosave your notebook every 120 seconds to this checkpoint file without altering your primary notebook file. When you "Save and Checkpoint," both the notebook and checkpoint files are updated. Hence, the checkpoint enables you to recover your unsaved work in the event of an unexpected issue.

You can revert to the checkpoint from the menu via "File > Revert to Checkpoint."

Investigating Our Data Set

Now we're really rolling! Our notebook is safely saved and we've loaded our data set df into the most-used pandas data structure, which is called a DataFrame and basically looks like a table. What does ours look like?

    df.head()

| | Year | Rank | Company | Revenue (in millions) | Profit (in millions) |
|---|---|---|---|---|---|
| 0 | 1955 | 1 | General Motors | 9823.5 | 806 |
| 1 | 1955 | 2 | Exxon Mobil | 5661.4 | 584.8 |
| 2 | 1955 | 3 | U.S. Steel | 3250.4 | 195.4 |
| 3 | 1955 | 4 | General Electric | 2959.1 | 212.6 |
| 4 | 1955 | 5 | Esmark | 2510.8 | 19.1 |

    df.tail()

| | Year | Rank | Company | Revenue (in millions) | Profit (in millions) |
|---|---|---|---|---|---|
| 25495 | 2005 | 496 | Wm. Wrigley Jr. | 3648.6 | 493 |
| 25496 | 2005 | 497 | Peabody Energy | 3631.6 | 175.4 |
| 25497 | 2005 | 498 | Wendy's International | 3630.4 | 57.8 |
| 25498 | 2005 | 499 | Kindred Healthcare | 3616.6 | 70.6 |
| 25499 | 2005 | 500 | Cincinnati Financial | 3614.0 | 584 |

Looking good. We have the columns we need, and each row corresponds to a single company in a single year.

Let's just rename those columns so we can refer to them later.

    df.columns = ['year', 'rank', 'company', 'revenue', 'profit']

Next, we need to explore our data set. Is it complete? Did pandas read it as expected? Are any values missing?

    len(df)

    25500

Okay, that looks good: that's 500 rows for every year from 1955 to 2005, inclusive.

Let's check whether our data set has been imported as we would expect. A simple check is to see if the data types (or dtypes) have been correctly interpreted.

    df.dtypes

    year         int64
    rank         int64
    company     object
    revenue    float64
    profit      object
    dtype: object

Uh oh. It looks like there's something wrong with the profits column; we would expect it to be a float64 like the revenue column. This indicates that it probably contains some non-numeric values, so let's take a look.

    non_numberic_profits = df.profit.str.contains('[^0-9.-]')
    df.loc[non_numberic_profits].head()

| | year | rank | company | revenue | profit |
|---|---|---|---|---|---|
| 228 | 1955 | 229 | Norton | 135.0 | N.A. |
| 290 | 1955 | 291 | Schlitz Brewing | 100.0 | N.A. |
| 294 | 1955 | 295 | Pacific Vegetable Oil | 97.9 | N.A. |
| 296 | 1955 | 297 | Liebmann Breweries | 96.0 | N.A. |
| 352 | 1955 | 353 | Minneapolis-Moline | 77.4 | N.A. |

Just as we suspected! Some of the values are strings, which have been used to indicate missing data. Are there any other values that have crept in?

    set(df.profit[non_numberic_profits])

    {'N.A.'}

That makes it easy to interpret, but what should we do? Well, that depends how many values are missing.

    len(df.profit[non_numberic_profits])

    369

It's a small fraction of our data set, though not completely inconsequential as it is still around 1.5%.
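The figures quoted so far can be sanity-checked with a few lines of plain Python. This is only arithmetic on the numbers reported above (25500 rows, 369 missing profits, 500 companies per year); the variable names are illustrative.

```python
# Figures quoted in the text above.
first_year, last_year = 1955, 2005
rows_per_year = 500
total_rows = 25500
missing_rows = 369

# 1955 to 2005 inclusive is 51 years, at 500 companies per year.
n_years = last_year - first_year + 1
expected_rows = n_years * rows_per_year

# Share of rows whose profit is recorded as 'N.A.'.
missing_pct = 100 * missing_rows / total_rows

print(expected_rows == total_rows)
print(round(missing_pct, 2))
```

The missing share works out to a little under 1.5%, which is consistent with the rounded figure in the text.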

If rows containing N.A. are, roughly, uniformly distributed over the years, the easiest solution would just be to remove them. So let's have a quick look at the distribution.

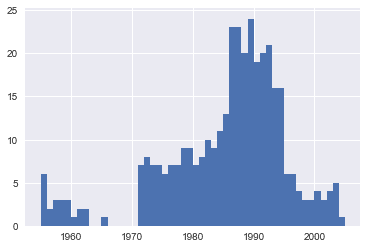

    bin_sizes, _, _ = plt.hist(df.year[non_numberic_profits], bins=range(1955, 2006))

At a glance, we can see that the most invalid values in a single year is fewer than 25, and as there are 500 data points per year, removing these values would account for less than 5% of the data for the worst years. Indeed, other than a surge around the 90s, most years have fewer than half the missing values of the peak.

For our purposes, let's say this is acceptable and go ahead and remove these rows.

    df = df.loc[~non_numberic_profits]
    df.profit = df.profit.apply(pd.to_numeric)

We should check that worked.

    len(df)

    25131

    df.dtypes

    year         int64
    rank         int64
    company     object
    revenue    float64
    profit     float64
    dtype: object

Great! We have finished our data set setup.

If we were going to present our notebook as a report, we could get rid of the investigatory cells we created, which are included here as a demonstration of the flow of working with notebooks, and merge relevant cells (see the Advanced Functionality section below for more on this) to create a single data set setup cell.

This would mean that if we ever mess up our data set elsewhere, we can just rerun the setup cell to restore it.

Plotting with matplotlib

Next, we can get to addressing the question at hand by plotting the average profit by year. We might as well plot the revenue as well, so first we can define some variables and a method to reduce our code.

    group_by_year = df.loc[:, ['year', 'revenue', 'profit']].groupby('year')
    avgs = group_by_year.mean()
    x = avgs.index
    y1 = avgs.profit

    def plot(x, y, ax, title, y_label):
        ax.set_title(title)
        ax.set_ylabel(y_label)
        ax.plot(x, y)
        ax.margins(x=0, y=0)

Now let's plot!

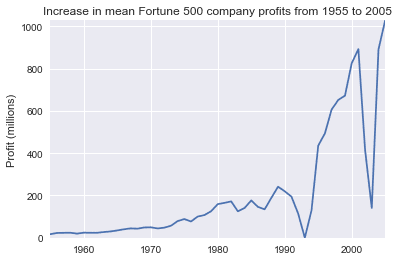

    fig, ax = plt.subplots()
    plot(x, y1, ax, 'Increase in mean Fortune 500 company profits from 1955 to 2005', 'Profit (millions)')

Wow, that looks like an exponential, but it's got some huge dips. They must correspond to the early 1990s recession and the dot-com bubble. It's pretty interesting to see that in the data. But how come profits recovered to even higher levels after each recession?

Perhaps the revenues can tell us more.

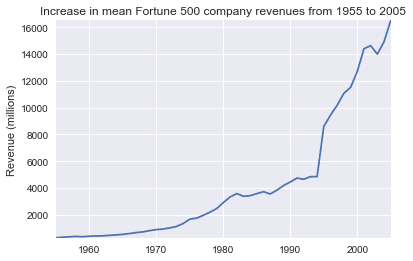

    y2 = avgs.revenue
    fig, ax = plt.subplots()
    plot(x, y2, ax, 'Increase in mean Fortune 500 company revenues from 1955 to 2005', 'Revenue (millions)')

That adds another side to the story. Revenues were not as badly hit; that's some great accounting work from the finance departments.

With a little aid from Stack Overflow, we can superimpose these plots with +/- their standard deviations.

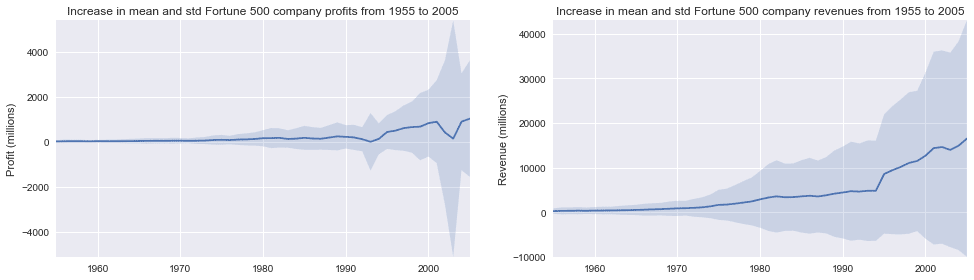

    def plot_with_std(x, y, stds, ax, title, y_label):
        ax.fill_between(x, y - stds, y + stds, alpha=0.2)
        plot(x, y, ax, title, y_label)

    fig, (ax1, ax2) = plt.subplots(ncols=2)
    title = 'Increase in mean and std Fortune 500 company %s from 1955 to 2005'
    stds1 = group_by_year.std().profit.values
    stds2 = group_by_year.std().revenue.values
    plot_with_std(x, y1.values, stds1, ax1, title % 'profits', 'Profit (millions)')
    plot_with_std(x, y2.values, stds2, ax2, title % 'revenues', 'Revenue (millions)')
    fig.set_size_inches(14, 4)
    fig.tight_layout()

That's staggering, the standard deviations are huge! Some Fortune 500 companies make billions while others lose billions, and the risk has increased along with rising profits over the years.

Perhaps some companies perform better than others; are the profits of the top 10% more or less volatile than the bottom 10%?

There are plenty of questions that we could look into next, and it's easy to see how the flow of working in a notebook can match one's own thought process. For the purposes of this tutorial, we'll stop our analysis here, but feel free to continue digging into the data on your own!

This flow helped us to easily investigate our data set in one place without context switching between applications, and our work is immediately shareable and reproducible. If we wished to create a more concise report for a particular audience, we could quickly refactor our work by merging cells and removing intermediary code.

Sharing Your Notebooks

When people talk about sharing their notebooks, there are generally two paradigms they may be considering.

Most often, individuals share the end-result of their work, much like this article itself, which means sharing non-interactive, pre-rendered versions of their notebooks. However, it is also possible to collaborate on notebooks with the aid of version control systems such as Git or online platforms like Google Colab.

Before You Share

A shared notebook will appear exactly in the state it was in when you export or save it, including the output of any code cells. Therefore, to ensure that your notebook is share-ready, so to speak, there are a few steps you should take before sharing:

- Click "Cell > All Output > Clear"

- Click "Kernel > Restart & Run All"

- Wait for your code cells to finish executing and check that they ran as expected

This will ensure your notebooks don't contain intermediary output, don't have a stale state, and execute in order at the time of sharing.

Exporting Your Notebooks

Jupyter has built-in back up for exporting to HTML and PDF also as several other formats, which you can notice from the card under "File > Download As."

If you wish to share your notebooks with a minor private group, this functionality may well exist all you need. Indeed, as many researchers in academic institutions are given some public or internal webspace, and because yous can export a notebook to an HTML file, Jupyter Notebooks can exist an especially convenient manner for researchers to share their results with their peers.

But if sharing exported files doesn't cut it for you, there are also some immensely popular methods of sharing .ipynb files more directly on the web.

GitHub

With the number of public notebooks on GitHub exceeding 1.8 million by early 2018, it is surely the most popular independent platform for sharing Jupyter projects with the world. GitHub has integrated support for rendering .ipynb files directly both in repositories and gists on its website. If you aren't already aware, GitHub is a code hosting platform for version control and collaboration for repositories created with Git. You'll need an account to use their services, but standard accounts are free.

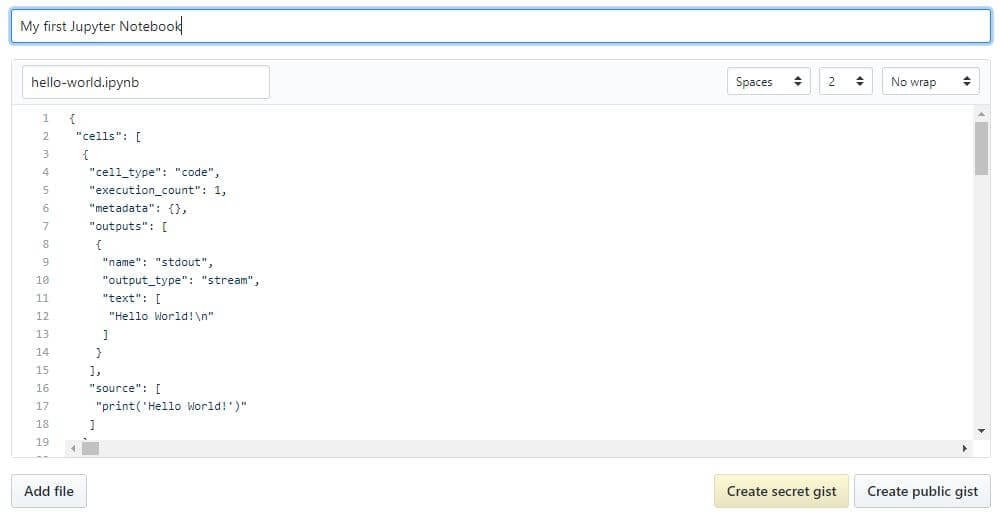

Once you have a GitHub account, the easiest way to share a notebook on GitHub doesn't actually require Git at all. Since 2008, GitHub has provided its Gist service for hosting and sharing code snippets, which each get their own repository. To share a notebook using Gists:

- Sign in and navigate to gist.github.com.

- Open your .ipynb file in a text editor, select all and copy the JSON inside.

- Paste the notebook JSON into the gist.

- Give your Gist a filename, remembering to add .ipynb or this will not work.

- Click either "Create secret gist" or "Create public gist."

This should look something like the following:

If you created a public Gist, you will now be able to share its URL with anyone, and others will be able to fork and clone your work.

Creating your own Git repository and sharing this on GitHub is beyond the scope of this tutorial, but GitHub provides plenty of guides for you to get started on your own.

An extra tip for those using Git is to add an exception to your .gitignore for those hidden .ipynb_checkpoints directories Jupyter creates, so as not to commit checkpoint files unnecessarily to your repo.
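The .gitignore tip can be automated with a few lines of standard-library Python. This is only a sketch: the function name ignore_checkpoints is hypothetical, and it assumes you pass the root directory of your repository.

```python
from pathlib import Path

CHECKPOINT_PATTERN = ".ipynb_checkpoints/"


def ignore_checkpoints(repo_dir: str) -> bool:
    """Append the checkpoint pattern to .gitignore; return True if it was added."""
    gitignore = Path(repo_dir) / ".gitignore"
    existing = gitignore.read_text().splitlines() if gitignore.exists() else []
    # Skip the write if the pattern is already listed, with or without a slash.
    if any(line.strip().rstrip("/") == ".ipynb_checkpoints" for line in existing):
        return False
    existing.append(CHECKPOINT_PATTERN)
    gitignore.write_text("\n".join(existing) + "\n")
    return True
```

Calling ignore_checkpoints(".") from the repository root adds the pattern once, and calling it again leaves the file unchanged.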

Nbviewer

Having grown to render hundreds of thousands of notebooks every week by 2015, NBViewer is the most popular notebook renderer on the web. If you already have somewhere to host your Jupyter Notebooks online, be it GitHub or elsewhere, NBViewer will render your notebook and provide a shareable URL along with it. Provided as a free service as part of Project Jupyter, it is available at nbviewer.jupyter.org.

Initially developed before GitHub's Jupyter Notebook integration, NBViewer allows anyone to enter a URL, Gist ID, or GitHub username/repo/file and it will render the notebook as a webpage. A Gist's ID is the unique number at the end of its URL; for example, the string of characters after the last slash in https://gist.github.com/username/50896401c23e0bf417e89cd57e89e1de. If you enter a GitHub username or username/repo, you will see a minimal file browser that lets you explore a user's repos and their contents.
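To make the Gist ID concrete, here is a small sketch that pulls it out of the example URL using only the standard library. The function name gist_id_from_url is hypothetical; it simply returns the final path segment of whatever URL it is given.

```python
from urllib.parse import urlparse


def gist_id_from_url(url: str) -> str:
    """Return the last path segment of a Gist URL, which is the Gist's ID."""
    path = urlparse(url).path.rstrip("/")
    return path.rsplit("/", 1)[-1]


gist_id = gist_id_from_url(
    "https://gist.github.com/username/50896401c23e0bf417e89cd57e89e1de"
)
print(gist_id)
```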

The URL NBViewer displays when displaying a notebook is a constant based on the URL of the notebook it is rendering, so you can share this with anyone and it will work as long as the original files remain online, since NBViewer doesn't cache files for very long.

If you don't like Nbviewer, there are other similar options; here's a thread with a few to consider from our community.

Extras: Jupyter Notebook Extensions

We've already covered everything you need to get rolling in Jupyter Notebooks.

What Are Extensions?

Extensions are precisely what they sound like: additional features that extend Jupyter Notebooks' functionality. While a base Jupyter Notebook can do an awful lot, extensions offer some additional features that may help with specific workflows, or that simply improve the user experience.

For example, one extension called "Table of Contents" generates a table of contents for your notebook, to make large notebooks easier to visualize and navigate around.

Another one, called Variable Inspector, will show you the value, type, size, and shape of every variable in your notebook for easy quick reference and debugging.

Another, called ExecuteTime, lets you know when and for how long each cell ran, which can be especially convenient if you're trying to speed up a snippet of your code.

These are just the tip of the iceberg; there are many extensions available.

Where Can You Get Extensions?

To get the extensions, you need to install Nbextensions. You can do this using pip and the command line. If you have Anaconda, it may be better to do this through Anaconda Prompt rather than the regular command line.

Close Jupyter Notebooks, open Anaconda Prompt, and run the following command: pip install jupyter_contrib_nbextensions && jupyter contrib nbextension install.

Once you've done that, start up a notebook and you should see an Nbextensions tab. Clicking this tab will show you a list of available extensions. Simply tick the boxes for the extensions you want to enable, and you're off to the races!

Installing Extensions

Once Nbextensions itself has been installed, there's no need for additional installation of each extension. However, if you've already installed Nbextensions but aren't seeing the tab, you're not alone. This thread on GitHub details some common issues and solutions.

Extras: Line Magics in Jupyter

We mentioned magic commands earlier when we used %matplotlib inline to make Matplotlib charts render right in our notebook. There are many other magics we can use, too.

How to Use Magics in Jupyter

A good first step is to open a Jupyter Notebook, type %lsmagic into a cell, and run the cell. This will output a list of the available line magics and cell magics, and it will also tell you whether "automagic" is turned on.

- Line magics operate on a single line of a code cell

- Cell magics operate on the entire code cell in which they are called

If automagic is on, you can run a line magic simply by typing it on its own line in a code cell and running the cell. If it is off, you will need to put % before line magics to use them. Cell magics always need the %% prefix.

Many magics require additional input (much like a function requires an argument) to tell them how to operate. We'll look at an example in the next section, but you can see the documentation for any magic by running it with a question mark, like so:

%matplotlib?

When you run the above cell in a notebook, a lengthy docstring will pop up onscreen with details about how you can use the magic.

A Few Useful Magic Commands

We cover more in the advanced Jupyter tutorial, but here are a few to get you started:

| Magic Command | What it does |
|---|---|
| %run | Runs an external script file as part of the cell being executed. For example, if %run myscript.py appears in a code cell, myscript.py will be executed by the kernel as part of that cell. |
| %timeit | Counts loops, then measures and reports how long a code cell takes to execute. |
| %%writefile | Saves the contents of a cell to a file. For example, %%writefile myscript.py would save the code cell as an external file called myscript.py. |
| %store | Saves a variable for use in a different notebook. |
| %pwd | Prints the directory path you're currently working in. |
| %%javascript | Runs the cell as JavaScript code. |
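The %timeit magic relies on Python's standard timeit module, so a rough equivalent can be run outside a notebook as well. The sketch below is illustrative only: the statement being timed and the loop counts are arbitrary choices, and the report format differs from what the magic prints.

```python
import timeit

# Time a small statement: 5 repeats of 1,000 loops each.
timings = timeit.repeat("sum(range(100))", repeat=5, number=1000)

# Report the best per-loop time, since the fastest run is the least noisy.
best_per_loop = min(timings) / 1000
print(f"best of 5: {best_per_loop * 1e6:.2f} µs per loop")
```

Inside a notebook, the magic handles the loop counting for you, which is why it is usually the more convenient choice there.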

There's plenty more where that came from. Hop into Jupyter Notebooks and start exploring using %lsmagic!

Final Thoughts

Starting from scratch, we have come to grips with the natural workflow of Jupyter Notebooks, delved into IPython's more advanced features, and finally learned how to share our work with friends, colleagues, and the world. And we accomplished all this from a notebook itself!

It should be clear how notebooks promote a productive working experience by reducing context switching and emulating a natural development of thoughts during a project. The power of using Jupyter Notebooks should also be evident, and we covered plenty of leads to get you started exploring more advanced features in your own projects.

If you'd like further inspiration for your own Notebooks, Jupyter has put together a gallery of interesting Jupyter Notebooks that you may find helpful, and the Nbviewer homepage links to some really fancy examples of quality notebooks.

More Great Jupyter Notebooks Resources

- Advanced Jupyter Notebooks Tutorial – Now that you've mastered the basics, become a Jupyter Notebooks pro with this advanced tutorial!

- 28 Jupyter Notebooks Tips, Tricks, and Shortcuts – Make yourself into a power user and increase your efficiency with these tips and tricks!

- Guided Project – Install and Learn Jupyter Notebooks – Give yourself a great foundation working with Jupyter Notebooks by working through this interactive guided project that'll get you set up and teach you the ropes.



Posted by: baldwinwiticher97.blogspot.com
